
PhD in Computer Science at UT Austin, advised by Yuke Zhu, and research intern at the NVIDIA GEAR (Generalist Embodied Agent Research) Lab. Previously, I completed an MS in Computer Science at Stanford, advised by Jeannette Bohg, and studied EECS at UC Berkeley. I did research at Waabi, advised by Raquel Urtasun, and at Berkeley Artificial Intelligence Research with members of Pieter Abbeel's Robot Learning Lab. I also did research with Somil Bansal. If you're interested in collaborating or getting into robotics, feel free to reach out!

Email / LinkedIn / GitHub / Google Scholar


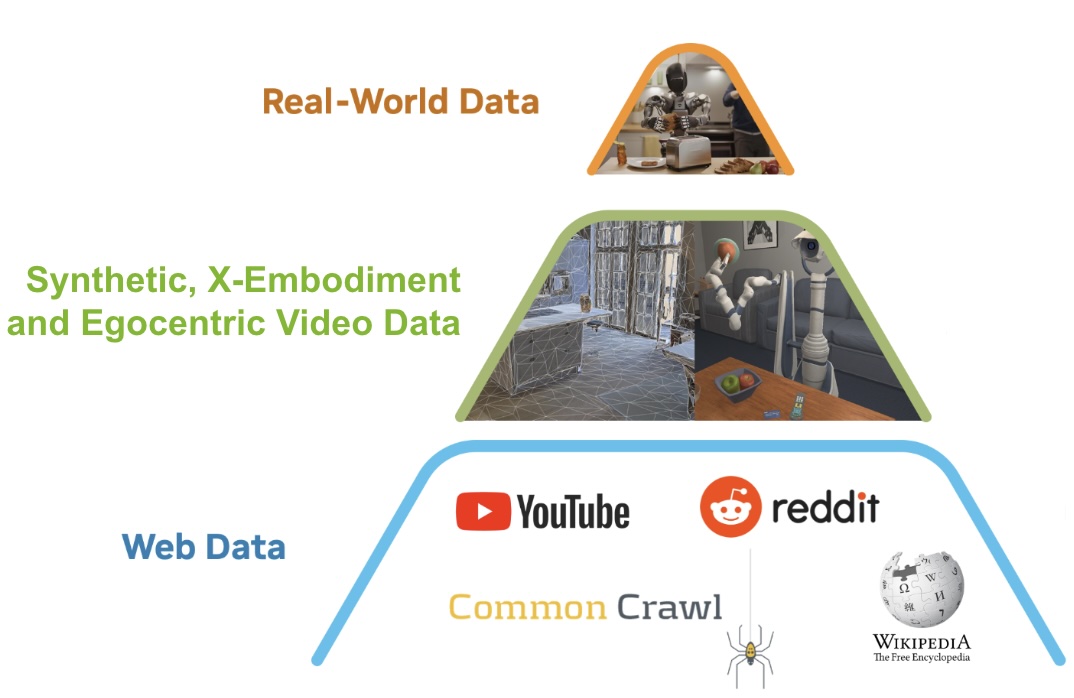

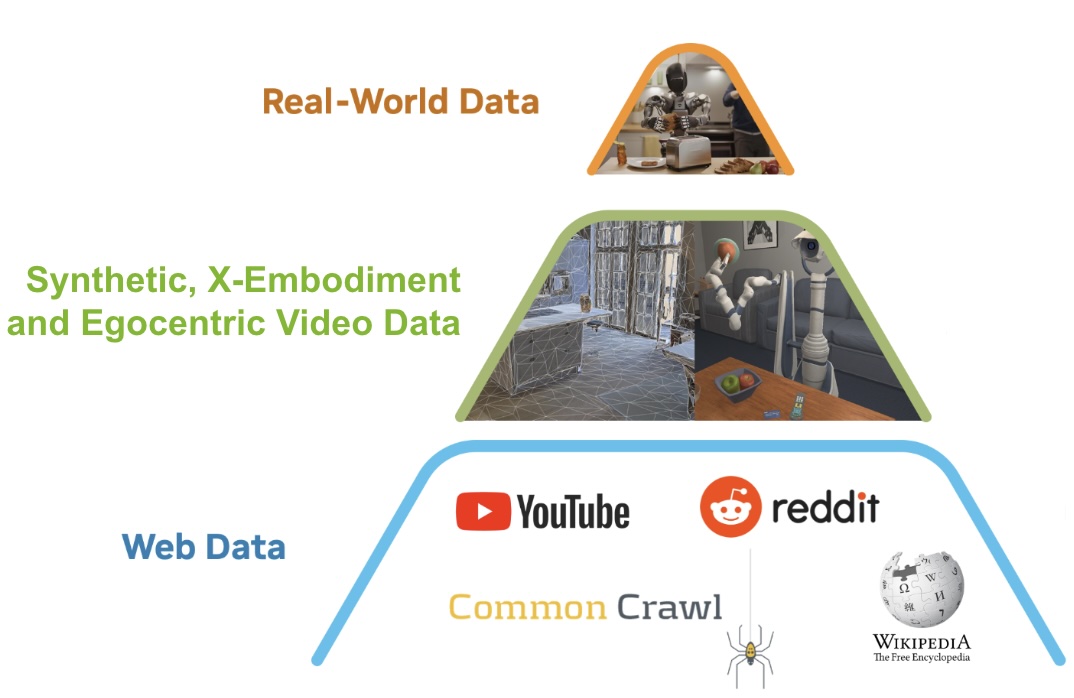

I'm interested in developing general-purpose robots. Previously, I leveraged foundation models for robust, verified long-horizon planning for robot manipulation from natural language instructions. Currently, I'm developing a general-purpose robotic manipulation framework that achieves high reliability on tasks given only a few demonstrations. In the future, I aim to develop a general-purpose robotics foundation model that can be finetuned for specific robot embodiments and settings. I plan to achieve this goal by leveraging a diverse range of data sources (real-world robot data, simulation data, and human videos) and methods from machine learning, computer vision, graphics, and robotics.


Kevin Lin, Varun Ragunath*, Andrew McAlinden*, Aaditya Prasad, Jimmy Wu, Yuke Zhu, Jeannette Bohg. Conference on Robot Learning, 2025. We present CP-Gen, a synthetic data generation framework that uses a single expert trajectory to generate thousands of robot demonstrations containing novel object geometries and poses. These generated demonstrations are used to train closed-loop visuomotor policies that transfer zero-shot from simulation to the real world.


NVIDIA GR00T Team. Technical Report, 2025. We introduce GR00T N1, an open foundation model for humanoid robots. This Vision-Language-Action (VLA) model features an end-to-end dual-system architecture: the vision-language module (System 2) interprets the environment, while the diffusion transformer (System 1) generates real-time motor actions. GR00T N1 outperforms state-of-the-art imitation learning baselines on standard simulation benchmarks across multiple robot embodiments.


Yuke Zhu, Josiah Wong, Ajay Mandlekar, Roberto Martín-Martín, Abhishek Joshi, Kevin Lin, Abhiram Maddukuri, Soroush Nasiriany, Yifeng Zhu. Technical Report, 2025. robosuite is a modular simulation framework and benchmark for robot learning, providing a rich set of manipulation environments with various robot arms and grippers.


Kevin Lin*, Zhenyu Jiang*, Yuqi Xie*, Zhenjia Xu, Weikang Wan, Ajay Mandlekar†, Jim Fan†, Yuke Zhu†. International Conference on Robotics and Automation, 2025. We introduce DexMimicGen, a large-scale automated data generation system that synthesizes trajectories from a handful of human demonstrations for humanoid robots with dexterous hands. We use DexMimicGen to generate data and deploy a trained policy on a real-world humanoid can-sorting task.


Aaditya Prasad, Kevin Lin, Linqi Zhou, Jeannette Bohg. Robotics: Science and Systems, 2024. We propose Consistency Policy, a faster and similarly powerful alternative to Diffusion Policy for learning visuomotor robot manipulation policies. We compare Consistency Policy with Diffusion Policy and other related speed-up methods across six simulation tasks as well as one real-world task, where we demonstrate inference on a laptop GPU. Across all these tasks, Consistency Policy speeds up inference by an order of magnitude compared to the fastest alternative method while maintaining competitive success rates.


Alexander Khazatsky*, Karl Pertsch*, ..., Kevin Lin, ..., Sergey Levine, Chelsea Finn. Robotics: Science and Systems, 2024. We introduce DROID, the most diverse robot manipulation dataset to date. It contains 76k demonstration trajectories, or 350 hours of interaction data, collected across 564 scenes and 84 tasks by 50 data collectors in North America, Asia, and Europe over the course of 12 months. We demonstrate that training with DROID leads to policies with higher performance and improved generalization. We open-source the full dataset, policy learning code, and a detailed guide for reproducing our robot hardware setup.


Kevin Lin*, Christopher Agia*, Toki Migimatsu, Marco Pavone, Jeannette Bohg. Autonomous Robots, 2023 (Special Issue: Large Language Models in Robotics). We propose Text2Motion, a language-based planning framework that enables robots to solve sequential manipulation tasks requiring long-horizon reasoning. Given a natural language instruction, our framework constructs both a task-level and a motion-level plan that is verified to reach inferred symbolic goals.


Open X-Embodiment Collaboration. IEEE International Conference on Robotics and Automation (ICRA), 2024 (Best Paper Award Finalist). We introduce the Open X-Embodiment Dataset, the largest robot learning dataset to date, with 1M+ real robot trajectories spanning 22 robot embodiments. We train large, transformer-based policies on the dataset (RT-1-X, RT-2-X) and show that co-training with our diverse dataset substantially improves performance.


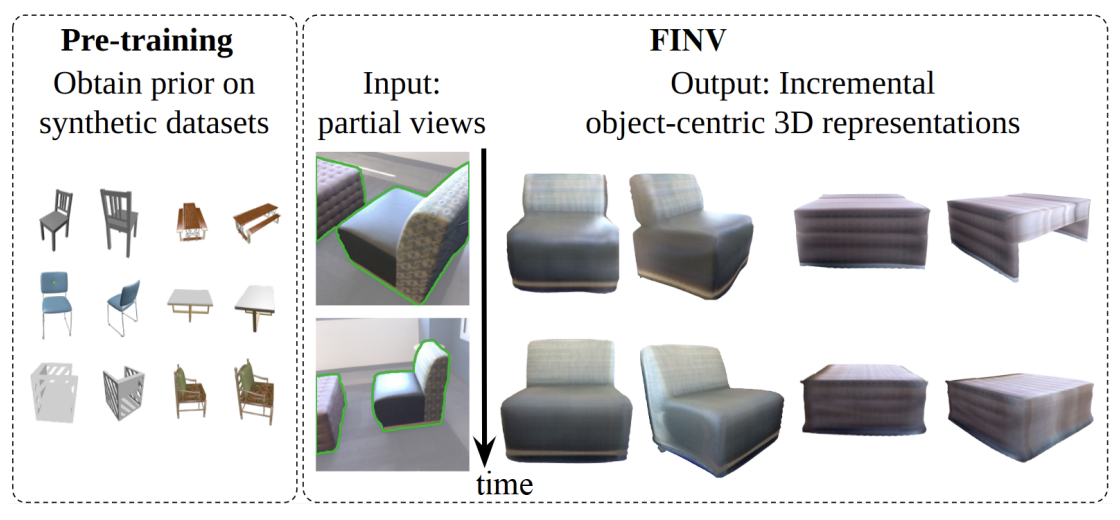

Fan-Yun Sun, Jonathan Tremblay, Valts Blukis, Kevin Lin, Danfei Xu, Boris Ivanovic, Peter Karkus, Stan Birchfield, Dieter Fox, Ruohan Zhang, Yunzhu Li, Jiajun Wu, Marco Pavone, Nick Haber. International Conference on 3D Vision, 2024 (Oral Presentation). We propose a framework that combines the strengths of generative modeling and network finetuning to generate photorealistic 3D renderings of real-world objects from sparse and sequential RGB inputs.


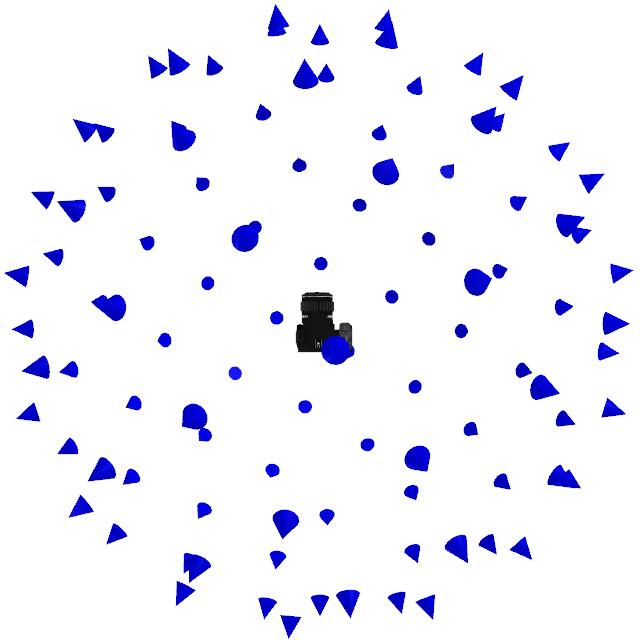

Kevin Lin, Brent Yi. Robotics: Science and Systems Workshop on Implicit Representations for Robot Manipulation, 2022 (Spotlight Presentation). We motivate, discuss, and present a study of the problem of view planning for radiance fields. We introduce active-3d-gym, a benchmark for evaluating view planning algorithms for radiance field reconstruction, and propose a simple solution to the view planning problem based on radiance field ensembles.


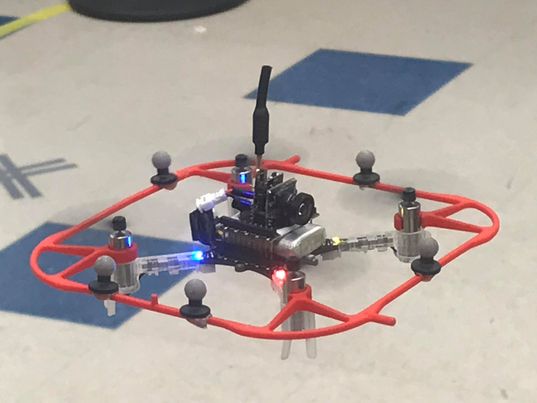

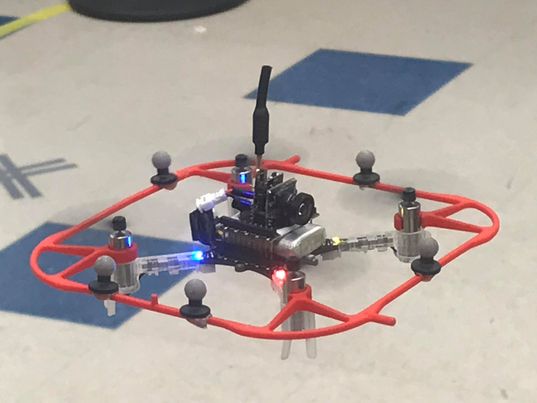

Kevin Lin, Brian Huo, Megan Hu. We study how aerial robots can autonomously learn to navigate safely in novel indoor environments by combining optimal control and learning techniques. We train our agent entirely in simulation and demonstrate generalization to novel indoor scenes.


E Vinitsky, A Filos, Kevin Lin, N Liu, N Lichtle, A Dragan, A Bayen, R McAllister, J Foerster. NeurIPS Workshop on Emergent Communication, 2020. Occlusions present a major obstacle to guaranteeing safety in autonomous driving. Our key insight is that autonomous vehicles (AVs) can sometimes obtain information about occluded regions by reasoning about the actions of other agents on the road. We demonstrate that AVs can use this inferred information and level-k reasoning to avoid collisions with occluded pedestrians and drive in a pro-social manner.


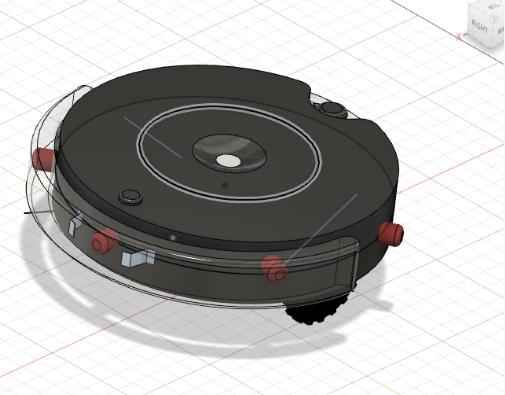

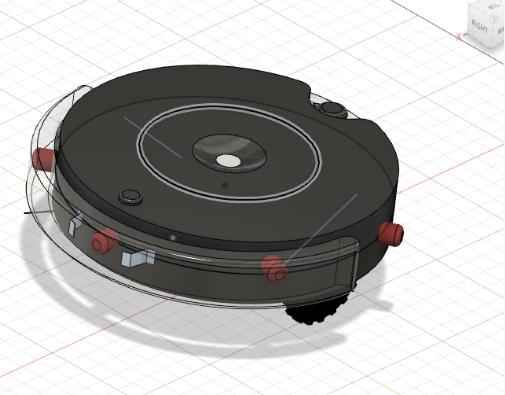

Won 1st place among final projects in a UC Berkeley robotics course. Envisioned and built a prototype vision-only robot vacuum that uses visual SLAM, designed to compete with LiDAR-based Roomba models.


Developed an open-source tool for applying ML techniques to autonomous vehicle driving policy discovery.

Feel free to share your feedback here.


Teaching Assistant, CS 221, Fall 2023 and Fall 2024

Introduction to Artificial Intelligence. Instructors: Percy Liang, Dorsa Sadigh


Undergraduate Student Instructor, EE 126, Spring 2021

Probability and Random Processes

Undergraduate Student Instructor, CS 170, Fall 2020

Efficient Algorithms and Intractable Problems

Undergraduate Student Instructor, CS 70, Summer 2020

Discrete Math and Probability Theory